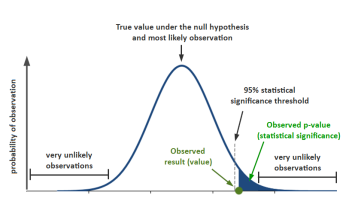

Hundreds, if not thousands, of books have been written about p-values and confidence intervals (CIs), two of the most widely used statistics in online controlled experiments. Yet these concepts remain elusive to many otherwise well-trained researchers, including A/B testing practitioners. Misconceptions and misinterpretations abound despite great efforts from statistics educators and experimentation evangelists. […]