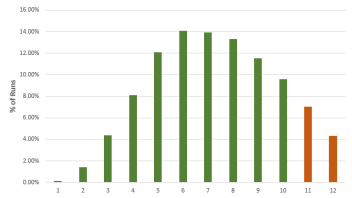

Running shorter tests is key to making experimentation more efficient: it means smaller direct losses from testing inferior experiences and less unrealized revenue from implementing superior ones late. Despite this, many practitioners have yet to start conducting tests at the frontier of efficiency. This article presents ways to shorten […]