It is high time to dispense with the myth that “statistically rigorous A/B tests are impractical”. In this piece I take the opportunity to bring down the many misconceptions that are typically underpinning the above claim.

Here are the four misconceptions regarding the role of scientific and statistical rigor in online A/B testing that I commonly encounter both in my consulting practice and in online discussions:

- Business experiments need not be scientifically rigorous

- Statistical rigor means testing with a 95% confidence threshold

- Proper statistics means an A/B test has to be evaluated just once

- Statistically rigorous A/B tests are impractical

Are superiors or colleagues subscribing to any of these myths? It may well be the major factor preventing one’s experimentation efforts from shining with their full potential. That is, if it is not derailing them altogether!

The following paragraphs take each of these down with precision strikes to the chin. Hopefully, this will give you a tool to effectively push against them so your A/B testing program is back on the right track. Sit back, relax in your front-row seat, and enjoy the show. It is taken as a given that you don’t mind the occasional splatter.

Business experiments need not be scientifically rigorous

What I hear most often from people afraid that scientific rigor may be detrimental to business objectives is something like the following:

“We are not a scientific research laboratory, so we do not require scientific rigor. We just want to see if this works or not. We are only interested in estimating what increase in conversion rate and revenue this change will deliver.”

True. A business is not a scientific laboratory. However, the unstated assumption is that the goals of a business test are somehow different than those of a scientific inquiry.

Such sentiments are often reinforced by or latched onto by proponents of quasi-Bayesian approaches who might use “scientific rigor” as almost a slander when speaking of “old ways of doing experimentation” or “frequentist statistics”. This may have the unfortunate effect of fortifying the understanding that “scientific” and especially “scientifically rigorous” is not something that applies to A/B tests which aim to address business questions.

Those who espouse such a view seem oblivious to the incredible overlap between the history of scientific experimentation and business experimentation. Here are a few notable examples:

- Student (1908), “The Probable Error of a Mean” was arguably one the papers laying the groundwork for statistical experimentation. It was written by William S. Gosset, a.k.a. “Student” while he was employed as a Head Experimental Brewer at Guinness. He spent his entire 38-year career there. “The self-trained Gosset developed new statistical methods – both in the brewery and on the farm – now central to the design of experiments, to proper use of significance testing on repeated trials, and to analysis of economic significance” (Wikipedia)

- Walter A. Shewhart laid the groundwork for statistical methods in industrial quality control. His entire career was split between two companies: “Western Electric Company” and “Bell Telephone Laboratories”.

- Ronald A. Fisher wrote his seminal works on design of experiments during his 14 years at Rothamsted Experimental Station or shortly thereafter. He was employed as a statistician tasked with devising and conducting experiments to increase crop yields. While not strictly a business venture, this is applied research with direct and measurable impact, with related costs and risks, including the cost of not acting soon enough, much like the one undertaken in business R&D departments.

- Abraham Wald is notable for his ground-breaking contributions to sequential sampling and decision theory. While he was an academic, he engaged in a lot of applied work for the army where it saw great results when applied (they were classified until the end of WWII).

- Many modern-day advances in statistics and design of experiments such as those in sequential estimation, adaptive experiments, and others, come from professionals in business employment, e.g. those in the R&D departments of major pharmaceutical companies, manufacturing facilities, or large tech corporations.

There has been cross-pollination between business R&D specialists and the scientific community with regards to experimental methodology all along.

While the above speaks volumes by itself, there may indeed be other reasons why so much of the scientific method has been developed with practical business applications in mind. However, the intro promised blows to the chin so here they are.

How scientific rigor helps achieve business goals

The goal of a business experiment (“We just want to see if this works or not. […] to estimate what increase […] this change will deliver”) is at its core the same as that of a scientific one: to establish a causal link between an action and its outcome(s).

This goal cannot be achieved without a scientifically rigorous experiment. It has to follow all the best practices for designing an experiment and for its statistical analysis. And even then the outcomes come with certain caveats.

Doing anything else is more or less a random shot in the dark. If you lack scientific methodology you might have a hit and not know it, or miss and not know it. Or hit and think you’ve missed, and vice-versa. Or, and this is likely most common, not have any effect while thinking that you do. Early scientists did a lot of this, and so did many businesses, with many horror stories on both fronts to attest to the effects.

Using observational statistical methods for assessing the impact of a change may fair better, depending on the circumstances and how good they are. However, they are not a replacement for experimentation. No matter how strong, a correlation does not entail causation.

Therefore, to establish that action X caused favorable outcome Y, one needs a proper experiment, a.k.a. A/B test. “Proper” refers to all phases of it: design, execution, and analysis.

For example, if one performs a randomized experiment, but fails to apply statistically rigorous methods, they have failed to perform a proper test. If the statistics are lacking, one may easily be fooled by random variations inherent to many real-life processes, even in an otherwise good experiment. Statistical methods allow the calculation of the probability of how likely the outcome, or an outcome greater than the observed was to happen, even if nothing of interest was going on. This translates into claims that can and cannot be rejected by the data at hand.

There is no way around it. This is true even if one does not like it. I too sometimes wish I was able to look at before and after numbers in Google Analytics and declare “this works” or “this change resulted in X% increase in metric Y”. But I know doing so may be worse than not looking at any numbers at all. That is why I would not base any decision with significant business impact on such a poor method and would conduct a rigorous A/B test instead.

Statistical rigor means testing with a 95% confidence threshold

A superficial understanding of scientific testing and dumbed-down statistics texts can leave one with the impression that 95% is akin to a magic number. It results in equating the use of a 95% confidence threshold to running a rigorous test. In this view, thresholds lower than that are not considered rigorous enough, while thresholds higher than that are rarely entertained.

Perhaps the reasoning goes something like this:

“If it is good enough for scientists, it surely is good enough for a business, right?”

Wrong. And no multiple levels to boot.

Most of the sciences do not have the blessing of being able to work with short timeframes, with known stakeholders, and with risks and rewards that may be calculated or at least estimated with decent accuracy. For these reasons minimum thresholds for scientific evidence have been de-facto accepted in scientific research.

Even so, 95% confidence (or a p-value threshold of 0.05) is just the lowest of standards, and it is only used in a few select disciplines and by some regulatory agencies. Others have much more stringent standards. For example, in physics a discovery is announced only after an observation clearing a threshold of 3×10−7. GWAS (Genome-wide association studies) have a threshold of 5×10−8. Both of these are many times more stringent than 0.05 which is just 5×10-2 in scientific notation.

So, not only is 0.05 not a golden standard, but a mere minimum threshold, it also varies from discipline to discipline, reflecting the different risk-reward calculations in each. This should be a good blow to the chin for the myth of the 95% confidence threshold. Let’s give it a few more hits for good measure.

Ultimately, the confidence threshold has nothing to do with the scientific or statistical rigor of an A/B test. The threshold should be chosen so that it results in a test outcome that, if it is statistically significant at that level, would be useful for guiding conclusions and actions. It simply reflects the maximum level of uncertainty at which the outcome would still warrant the rejection of the null hypothesis. The trustworthiness of the test itself comes from applying the right methods in the correct way.

In practice, each A/B test should be planned by taking into account the probabilistic risks and rewards involved. Such an approach results in a balance between type I and type II errors which maximizes the return on investment from testing. If a threshold of 95% seems too high for some test, it may well be so. For other, high-stakes scenarios, I’d argue that even 99% might be way too low a bar.

For a deeper dive on the matter of optimum ROI tests see this glossary entry and the related articles in it. If you want to see it in action, simply use Analytics Toolkit’s test creation wizard.

See this in action

The all-in-one A/B testing statistics solution

Proper statistics means an A/B test has to be evaluated only once

According to this myth, to run a test with proper statistics forbids peeking at the results and most certainly forbids “peeking with intent to stop”. According to such a view, one should limit themselves to just a single data evaluation at a predetermined sample size or time.

Unfortunately this misconception is still widely popular. It is even propagated at some university departments that deal with the matter. It is no wonder that if the above is believed to be true, one would also consider many A/B tests impractical.

This misconception stems from the fact that the most basic statistical tests indeed require a predetermined sample size (or duration) for their statistical guarantees to be meaningful. Applying these basic tests to situations which they are not meant to handle is surely a grave blow to the integrity of an A/B test.

Such basic tests have their time and place. However, in most business scenarios one benefits significantly from the ability to evaluate data at certain intervals and make decisions based on results which are overly positive or too unpromising, not to mention overly harmful. This is why sequential monitoring test designs have been developed, starting with the aforementioned A. Wald as early as the 1940s. They have been much refined and improved since. Sequential tests are now widely used both in business and in the sciences with a recent widely popularized example being the COVID-19 vaccine trials.

Powerful, flexible, and practical, sequential monitoring allows you to monitor the results as the data gathers, and to make decisions without compromising the statistical rigor of a test. You can experience them yourself at Analytics Toolkit’s A/B testing hub.

Statistically rigorous A/B tests are impractical

That is a heavy-hitter of a statement. However, its weakness becomes apparent once it is realized that its gargantuan hands are supported entirely by the myths just debunked. Without them, they fall limb, and the monster which would otherwise almost surely put an end to any A/B testing program, loses most if not all of its power.

Most of the impracticality is perceived, not actual, or is the result of a misguided view on what the role of A/B testing is. These perceived issues can be addressed one by one, as this article just did. Uncovering false assumptions and mistaken views with regard to certain practical matters is how to debunk the myth.

Explaining the role of A/B testing is always a good starting point. It is a device for controlling business risk, attributing effects, and estimating their size. Simple as that.

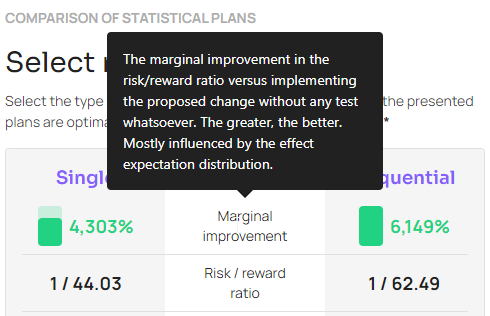

It will also be helpful to stress the fact that each test can and should be designed so it brings a marginal improvement in terms of business ROI against a scenario in which the change is implemented without testing.

Showing a clear benefit from testing versus implementing straight away is by far the best tool to convince skeptics.

“I know proper testing is practical, but it is hard to get buy-in”

Finally, I sometimes hear the statements like this one, even from people who are mostly aware of what value rigorous experiments can bring to a business:

“A client or a boss won’t accept a scientifically rigorous A/B test, were I to propose one.”

To the above I say: “Not. True.”.

It might not be the easiest sell, but one has to try and will often be surprised at how effective the truth is. Yes, with a tougher audience you might need to resort to the occasional trick like running an A/A test while calling it an A/B test all up until it ends. When you finally reveal your sleight of hand, it may be a “magic trick” which stays with your boss, colleague, or client, for life. In other cases you might need to do a meta-analysis of previous efforts before you can demonstrate just how wrong the results of less-scientific efforts were. And there might be a metaphorical bloody nose or two, and perhaps even someone sobbing quietly in a bathroom. It is all for the better.

The role of the scientific method and statistical rigor in A/B testing

The role of A/B testing is to control business risk, to properly attribute effects, and to help estimate their size in the face of variability. It can be viewed as a last line of defense. A shield against bad decisions that look good to most, but also as a way to demonstrate a change is beneficial even against widespread opposition.

A/B tests would not have the above capacity if they are not conducted in a rigorous way.

In that sense, scientifically rigorous methodology and statistically rigorous tests are at the core of A/B testing. They are the very reason why one sets out to test, in the first place.

That rigor is what sets A/B testing apart from any other approach for estimating the impact of a change or intervention. Scientific and statistical rigor are what makes it excellent at managing business risk. These are precisely the qualities which give experiments the power to change minds.

And why are in this line of business if not to change minds by uncovering objective reality?