Most of the time when discussing A/B testing, regardless of context, we discuss costs such as the expense of running an experimentation program or of shipping ‘winners’ to production. Only rarely do I see references to the less obvious, but usually more important, costs: the opportunity cost incurred during testing and the cost of shipping without testing at all.

These and other costs I’ve reviewed in detail in my book “Statistical Methods in Online A/B Testing” and to a lesser extent in articles such as “Costs and Benefits of A/B Testing”, “Risk vs. Reward in A/B Testing”, and “Inherent Costs of A/B Testing”. I will not go over all of these here so we can focus on the case I’d like to present to you.

A Mandatory Update

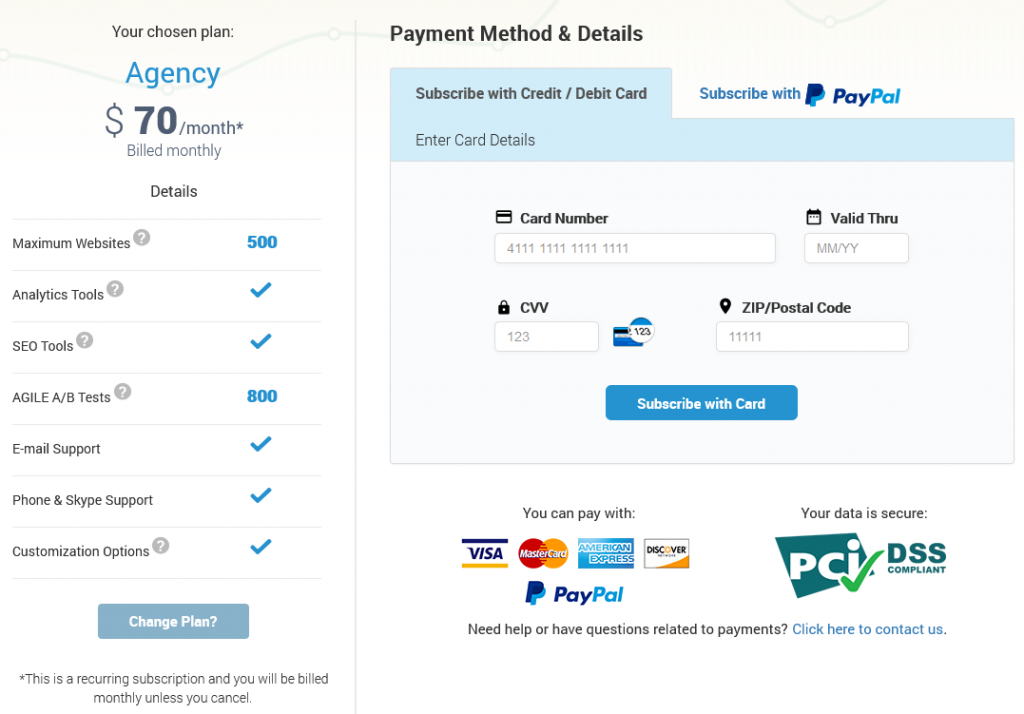

The case I’m going to lay out refers to this very website, Analytics-Toolkit.com. As you are possibly aware, we are a SaaS business offering Google Analytics automation tools and statistical tools for design and analysis of online controlled experiments, a.k.a. A/B tests. Our clients are mostly businesses with the occasional CRO or Google Analytics consultant or freelancer.

The above has direct relevance to the case, since this highly specialized business customer base ensures that we have not had a single case of a legitimate credit card chargeback issued against us (a couple were made in error, and subsequently retracted). Even stolen CC transactions are not an issue for a business like ours. If you’ve stolen a credit card, spending its limit on a highly specialized software tool with zero potential for malicious use is rather unlikely, to say the least.

Despite this, following new credit card processing standards and regulations going into effect in 2019/2020, we had to choose: either migrate our payment systems to support 3DSecure v.2 or risk a number of our paying customers being literally unable to pay us. It was essentially a no-choice scenario for us.

For those tempted by the details, we were complying with Europe’s Payment Services Directive II (PSD2) and particularly the Strong Customer Authentication (SCA) requirement.

To Test or Not to Test?

As you can see, the decision to implement this was practically made beforehand. We needed to comply with the regulation, despite it providing zero benefits to our particular customer base.

Technically the changes we had to make weren’t that many and so the amount of work accompanying this was low. It took us about a day of work to deploy on staging, run QA, and be ready to release and QA on production. It would be equally easy to roll back the change if we detected any serious issues.

I should mention that we have a system in place for measuring and reporting payment flow errors. Therefore, we figured that if there was an issue with the 3DSecure implementation we would easily identify it using these error reports. A fix would then be on the way fairly quickly. A temporary rollback is also a cheap option, if it becomes necessary.

Considering what kind of test we could run and how long it would take to get the necessary sample size, we had to consider the type of hypothesis we would use. By all measures A/B testing the new 3DSecure flow would fall into an ‘easy decision’ scenario if we are to use the 2-type classification I first defined in my article “The Case for Non-Inferiority A/B Tests”. It would be a great candidate for a non-inferiority hypothesis. In such a test the variant is to be implemented if it is not inferior to the control by more than a pre-specified margin. Essentially the test would protect against the risk of unintended bugs that might lead to a decrease in conversions and revenue.
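To make the idea of a non-inferiority hypothesis more concrete, here is a minimal sketch of a textbook one-sided z-test for the difference between two conversion rates. It is an illustration under stated assumptions, not the exact procedure described in the article mentioned above: the function name `non_inferiority_z_test`, the margin, and all the counts are hypothetical and are not our actual data.

```python
from math import sqrt
from statistics import NormalDist


def non_inferiority_z_test(conv_c, n_c, conv_v, n_v, margin, alpha=0.05):
    """One-sided z-test of H0: p_v - p_c <= -margin vs H1: p_v - p_c > -margin.

    margin is the largest absolute drop in conversion rate we are willing
    to tolerate (a positive number, e.g. 0.02 for two percentage points).
    Returns (z, p_value, non_inferior).
    """
    p_c = conv_c / n_c  # observed conversion rate, control
    p_v = conv_v / n_v  # observed conversion rate, variant
    # Unpooled standard error of the difference in proportions.
    se = sqrt(p_c * (1 - p_c) / n_c + p_v * (1 - p_v) / n_v)
    if se == 0:
        raise ValueError("standard error is zero; not enough variation in the data")
    # Shift the observed difference by the margin before standardizing.
    z = (p_v - p_c + margin) / se
    p_value = 1 - NormalDist().cdf(z)
    return z, p_value, p_value < alpha


# Hypothetical counts: old flow (control) vs. new 3DSecure flow (variant).
z, p_value, non_inferior = non_inferiority_z_test(
    conv_c=50, n_c=1000, conv_v=48, n_v=1000, margin=0.02
)
print(f"z = {z:.2f}, p = {p_value:.4f}, non-inferior: {non_inferior}")
```

With a variant that barely converts at all, as would happen with a blocked payment prompt, the same function would fail to declare non-inferiority, which is exactly the kind of protection such a test is meant to offer.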

However, the cost of running such a test was not insignificant since our existing (custom) A/B testing infrastructure only supported front-end tests. Modifying it to support splitting traffic into two paths, one towards the old payment flow (no 3DSecure) and one towards the new payment flow (with 3DSecure), would take additional time and resources to develop and QA.

Given the assurance offered by our payment error tracking system and the low costs involved in fixing any issues discovered post factum, the decision was made to not test the new payment flow.

Naturally, the next logical question is: what’s the worst that could happen, and how likely is it that it would happen and remain undetected for long?

What’s the Worst That Could Happen?

Well, naturally, we could lose all new business if there was a fatal flaw in our 3DSecure integration. A very unlikely scenario, you would say, especially given the rigorous quality assurance process this release went through.

However, some days went by and we got a complaint from a user that they were unable to pay. They reported that the process got stuck right about where the 3DS prompt would be. Given that the 3DS prompt is a pop-up and knowing how overly aggressive certain ad-blockers can be (an issue further exacerbated by having ‘analytics’ in the domain name), we assumed the problem was with the user’s browser configuration and tried to pinpoint the issue by working with them, unsuccessfully.

Several more days went by and we got a similar complaint from another user. In the meantime new subscriptions were still flowing in, and given the fairly low rate of such events, it was difficult to tell if the rate was lower than normal or not. A day or two later a third complaint came in, and at this point we were quite anxious. We tried working with our payment processing gateway provider to see if they saw any issues with our setup. We checked the payment error logs and found no new errors there, but that was not surprising as these are extremely rare.
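To illustrate why a low event rate makes a drop so hard to spot early, here is a rough sketch that treats new credit card subscriptions as a Poisson process. The baseline rate used below is purely hypothetical (it is not our actual rate), and `prob_zero_events` is a name made up for this example.

```python
from math import exp


def prob_zero_events(rate_per_day, days):
    """P(no events in `days` days) for a Poisson process with the given daily rate."""
    return exp(-rate_per_day * days)


# Hypothetical baseline: on average one new card subscription every two days.
baseline_rate = 0.5

for days in (3, 7, 14):
    p = prob_zero_events(baseline_rate, days)
    print(f"{days:>2} days with no card subscriptions: probability {p:.4f}")
```

Under this assumed rate, a few quiet days happen by chance fairly often, so they raise no alarm, while two weeks with no card subscriptions would be very unlikely if nothing were broken.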

Finally we went over our setup once more and found the culprit. It was a bug in the payment error tracking system which was actually interfering with the 3DSecure prompt, blocking it from appearing. Since it was the last piece to be attached to the process, it evaded our QA process.

Ironically, what was supposed to alert us to crucial errors was causing these same errors.

If you are curious, the transactions still going through after the 3DS update were from new subscribers who paid in ways other than credit card.

The Cost of Not A/B Testing

So, you might be asking, what was the cost to Analytics-Toolkit.com of skipping the A/B test in the above scenario? As with other such situations, one cannot say for sure, since we don’t have proper data from a controlled experiment. However, we know that other than the three potential customers who contacted us (whom we manually set up with a subscription), we did not have any other new paid subscriptions via credit card for a period of a couple of weeks.

While I’m not at liberty to disclose Lifetime Value (LTV) numbers, everyone can do a bit of math using our pricing plans and see that LTV per customer over, say, 2 years, ranges from $360 to $1680. So, while we don’t know exactly how many customers we lost, we can see that even with a low number the cost in terms of lost revenue is significant for a small business.
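The arithmetic is simple enough to sketch. The two-year LTV range comes from the figures above, while the numbers of lost customers are hypothetical values chosen only for illustration, since the real count is unknown. The helper name `lost_revenue_range` is likewise made up for this example.

```python
# Two-year LTV range per customer in USD, as quoted in the text.
LTV_LOW, LTV_HIGH = 360, 1680


def lost_revenue_range(lost_customers):
    """Return the (low, high) lost two-year revenue for a number of lost customers."""
    return lost_customers * LTV_LOW, lost_customers * LTV_HIGH


# Hypothetical counts of lost customers; the true number is not known.
for n in (1, 3, 5, 10):
    low, high = lost_revenue_range(n)
    print(f"{n:>2} lost customers: ${low:,} to ${high:,}")
```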

All our estimates show that we underestimated the risk of shipping without testing and that taking it was not worth it. A non-inferiority test to rule out at least severe issues like this one would have had a positive risk/reward ratio.

Should You Test Something That Will be Implemented Anyways?

Asked this way, the question doesn’t make much sense. Obviously, you shouldn’t, as it is just unnecessary overhead.

However, when phrased properly, it becomes:

“Should we test a particular implementation of a general idea, if the idea is going forward anyways?”

This is a vastly different question since the same idea can be implemented in more than one way, some good, many not so much.

Obviously, the ‘idea’ or ‘direction’ was decided on in advance in this case – we were going to implement 3DSecure no matter what. We had to. However, this doesn’t mean we shouldn’t have tested our particular implementation to make sure it is not harmful to our business.

So, the fact that the general direction is determined and immutable doesn’t negate the need to perform an A/B test, if such a test can be justified on the basis of a positive ratio between the summed risks and rewards.

A/B Testing is Not About ‘Wins’

I think the above case study on the risks of not A/B testing is yet another one supporting my argument that A/B testing is not equivalent to CRO (Conversion Rate Optimization). I believe this confusion is what has led many to regard A/B tests as ‘winner verification machines’ of sorts. On the contrary, an A/B test is something you should perform even if what’s tested is not expected to produce positive results for the business.

As I’ve argued many times before, A/B testing is all about risk management and data-driven decisions. Shifting the focus from producing ‘wins’ to managing the business risk associated with certain decisions and estimating the potential effect of certain actions will shift the way A/B testing is viewed and implemented within your organization. And this will be all for the better.