The problem most often faced by website owners who want to take a scientific approach to improving their sites through A/B testing is relatively small revenue. When the ROI calculation for an A/B test is done, it may turn out that testing is not economically feasible.

In some cases, the difference between an ROI-positive and an ROI-negative A/B test can be the cost of the testing software. It is certainly not the first item on the list of A/B testing costs, but depending on the cost of expertise and know-how, it can rank near the top.
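To make the arithmetic concrete, here is a deliberately simplified sketch, assuming ROI is computed as (expected gain minus total cost) divided by total cost, and that the expected gain is just annual revenue times the probability that the variant is a real winner times its lift. The function names and all input figures are hypothetical; this is not the ROI model used by our tools, which accounts for far more (risk of losses, time horizon, test duration).

```python
def expected_gain(annual_revenue: float, p_win: float, lift_if_win: float) -> float:
    """Simplified expected extra revenue: revenue x P(real winner) x lift if it wins."""
    return annual_revenue * p_win * lift_if_win


def ab_test_roi(gain: float, fixed_costs: float, software_cost: float) -> float:
    """ROI = (expected gain - total cost) / total cost."""
    total_cost = fixed_costs + software_cost
    return (gain - total_cost) / total_cost


if __name__ == "__main__":
    # All figures are hypothetical, chosen only to illustrate the arithmetic.
    gain = expected_gain(annual_revenue=200_000, p_win=0.3, lift_if_win=0.05)  # 3,000
    for software_cost in (0, 1_500):
        roi = ab_test_roi(gain, fixed_costs=2_000, software_cost=software_cost)
        print(f"software cost {software_cost:>5}: ROI = {roi:+.0%}")
```

With these made-up inputs, the same test goes from +50% ROI without software costs to about -14% once a 1,500 software fee is added, which is exactly the situation described above.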

For this reason, some websites decide to develop simple homegrown platforms for delivering A/B tests and then use third-party or homegrown statistical tools for planning and analysis. This is what we do at Analytics-Toolkit.com, for example.

Others lack the expertise and resources to develop a test delivery platform and turn to free alternatives instead. One of these is Google Optimize, the successor of Google Website Optimizer and Google Analytics Content Experiments. It lets you quickly set up A/B and multivariate tests, introduce changes to variants using a WYSIWYG editor, and it has a Bayesian A/B testing statistical engine. We have some criticism of how that engine is presented and of the claims made about it, which we discuss in a separate article.

Starting today, there is another alternative for those who want to use an advanced frequentist statistical engine, or who, like me, cannot make sense of the output of a statistical black box:

AGILE A/B testing with Google Optimize data

We have expanded our A/B Testing Calculator with the ability to pull data from your Google Optimize experiments. This means you can easily apply the powerful AGILE testing method to data gathered in Google Optimize experiments.

The AGILE A/B testing calculator uses a frequentist approach that allows the test data to be evaluated sequentially, with flexibility in when to do so. This is in contrast to simple fixed-sample-size solutions, which suffer significant inflation of the type I (false positive) error rate if the data is peeked at before the planned sample size is reached. The result is A/B tests that are 20-80% faster.
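The cost of peeking can be seen in a small simulation. The sketch below runs simulated A/A tests (no true difference) and checks a standard two-sided z-test at the 5% level after every batch of data, stopping at the first "significant" result. As a simplifying assumption, each batch adds an independent standard normal increment to the cumulative test statistic (the usual large-sample approximation) instead of simulating individual conversions; the function name and parameters are illustrative.

```python
import math
import random


def false_positive_rate(n_looks: int, n_sims: int = 20_000,
                        z_crit: float = 1.96, seed: int = 1) -> float:
    """Share of A/A tests declared 'significant' when checked at every look."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_sims):
        cumulative = 0.0
        for k in range(1, n_looks + 1):
            # Under H0, each equal-sized batch adds an N(0, 1) increment.
            cumulative += rng.gauss(0.0, 1.0)
            z = cumulative / math.sqrt(k)  # z-statistic on all data so far
            if abs(z) > z_crit:
                rejections += 1
                break  # the naive tester stops at the first "win"
    return rejections / n_sims


if __name__ == "__main__":
    print(f"1 look:   {false_positive_rate(1):.3f}")
    print(f"10 looks: {false_positive_rate(10):.3f}")
```

With a single look the rate stays near the nominal 5%; with ten unadjusted looks it climbs to roughly 19%. Sequential designs such as AGILE avoid this by using stopping boundaries that account for every interim analysis.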

The efficiency of AGILE testing comes from stopping early when the test data suggest a high probability that the outcome will be better or worse than the minimum detectable effect the test was planned for. This is achieved through statistically rigorous efficacy and futility stopping boundaries. Our tool also supports tests with multiple variants (A/B/C/n), using the appropriate statistical adjustments.
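The decision made at each interim analysis can be sketched as follows. This is not how the AGILE calculator computes its boundaries (those come from error-spending functions for both efficacy and futility). As an assumption, the efficacy boundary below uses the classic O'Brien-Fleming shape for equally spaced looks, with 2.040 being the commonly tabulated constant for five looks at two-sided α = 0.05 (roughly one-sided 0.025). The futility values in the example are made up purely for illustration.

```python
import math
from typing import List, Sequence


def obrien_fleming_bounds(n_looks: int, c: float = 2.040) -> List[float]:
    """Approximate O'Brien-Fleming efficacy bounds: z_k = c * sqrt(K / k)."""
    return [c * math.sqrt(n_looks / k) for k in range(1, n_looks + 1)]


def interim_decision(z: float, look: int,
                     efficacy: Sequence[float], futility: Sequence[float]) -> str:
    """Compare the observed z-score at a 1-based look with both boundaries."""
    i = look - 1
    if z >= efficacy[i]:
        return "stop: efficacy"   # evidence the variant beats the control
    if z <= futility[i]:
        return "stop: futility"   # unlikely to reach the planned effect
    return "continue"


if __name__ == "__main__":
    efficacy = obrien_fleming_bounds(5)
    futility = [-0.5, 0.5, 1.2, 1.7, 2.040]  # hypothetical values; meet efficacy at the end
    for look, z in [(1, 1.0), (2, 3.5), (3, 0.9)]:
        print(look, z, interim_decision(z, look, efficacy, futility))
```

Early efficacy bounds are very strict (about 4.56 at the first of five looks) and relax toward 2.04 at the final look, which is what keeps the overall false positive rate under control despite the repeated checks.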

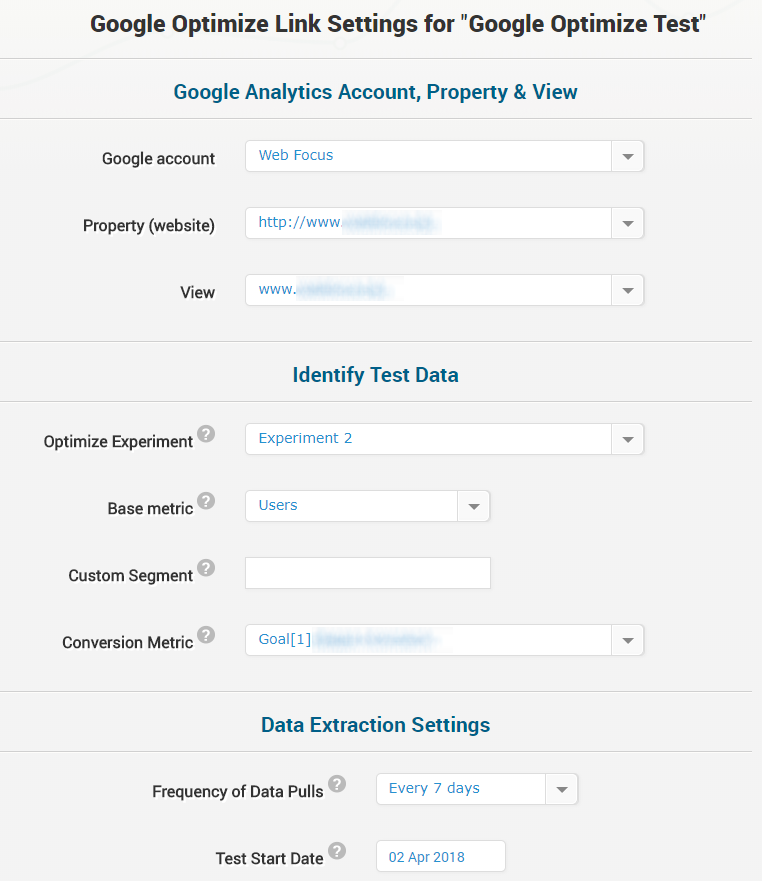

To connect your Google Optimize data to the AGILE A/B testing calculator, you need to link your Google Analytics account, in the same way our existing customers already do to use most of our tools. No additional steps or authorizations are required. Naturally, you will need to specify the Google Analytics view to which the Optimize experiment is linked; then just select the experiment name from a drop-down.

The conversion metric and test start date are filled in automatically, but you can change either if you want, though changing the start date is not recommended in most cases. There is no need to enter control and variant names or IDs.

The above screen might be familiar to users who currently run tests using Google Analytics custom dimensions to feed data automatically to our A/B testing calculator. It is essentially the same, but simplified, so it will be easy for you to switch to testing via Google Optimize should you need to in the future.

Advantages of using AGILE with Google Optimize

Unlike Google Optimize, where all metrics are session-based, our A/B testing calculator lets you use user-based metrics: users and the user-based conversion rate, or transaction rate / purchase rate. These are much more suitable for conversion rate optimization on e-commerce sites, where A/B tests generally aim to improve the user conversion rate and, consequently, the average revenue per user.
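The difference between the two kinds of metric is easy to see on toy data. The sketch below assumes a hypothetical record layout of `(variant, user_id, session_converted)` per session (not a Google Analytics export format) and computes both the session-based and the user-based conversion rate for each variant.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical per-session record: (variant, user_id, session_converted)
Session = Tuple[str, str, bool]


def conversion_rates(sessions: Iterable[Session]) -> Dict[str, Dict[str, float]]:
    """Session-based vs user-based conversion rate for each variant."""
    session_counts: Dict[str, int] = defaultdict(int)
    session_conversions: Dict[str, int] = defaultdict(int)
    users: Dict[str, set] = defaultdict(set)
    converters: Dict[str, set] = defaultdict(set)
    for variant, user_id, converted in sessions:
        session_counts[variant] += 1
        users[variant].add(user_id)
        if converted:
            session_conversions[variant] += 1
            converters[variant].add(user_id)  # a user counts once, however many conversions
    return {
        v: {
            "session_cr": session_conversions[v] / session_counts[v],
            "user_cr": len(converters[v]) / len(users[v]),
        }
        for v in session_counts
    }


if __name__ == "__main__":
    data = [
        ("A", "u1", True), ("A", "u2", False),
        # In B, user u3 needs three sessions to convert.
        ("B", "u3", False), ("B", "u3", False), ("B", "u3", True), ("B", "u4", False),
    ]
    print(conversion_rates(data))
```

In this toy example both variants convert half of their users, yet variant B's session-based rate drops from 0.5 to 0.25 simply because it generates more sessions per user. A user-based metric reflects what the business actually cares about: how many people buy.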

The results are easier to interpret since the tool uses established frequentist methods to calculate statistical significance (p-values) and confidence intervals, and everything happens within the null hypothesis significance testing (NHST) framework, which requires minimal assumptions about the data-generating process.
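For readers who want to see what such a frequentist calculation looks like, here is a minimal fixed-sample sketch: a pooled two-proportion z-test with a one-sided p-value (H1: variant B converts better than A) and a 95% Wald confidence interval for the absolute difference. The function names are illustrative. Note that this is the plain fixed-sample version; AGILE's sequential analysis additionally adjusts p-values and intervals for the interim looks, which this sketch does not do.

```python
import math
from typing import Tuple


def norm_sf(z: float) -> float:
    """Standard normal survival function, P(Z > z)."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def two_proportion_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                        z_ci: float = 1.959963984540054
                        ) -> Tuple[float, float, Tuple[float, float]]:
    """Return (z, one-sided p-value, 95% CI for p_b - p_a)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Pooled standard error under H0: both arms share one conversion rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = diff / se_pooled
    # Unpooled standard error for the confidence interval.
    se_unpooled = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    ci = (diff - z_ci * se_unpooled, diff + z_ci * se_unpooled)
    return z, norm_sf(z), ci


if __name__ == "__main__":
    z, p, (lo, hi) = two_proportion_test(500, 10_000, 580, 10_000)
    print(f"z = {z:.2f}, one-sided p = {p:.4f}, 95% CI = [{lo:.4f}, {hi:.4f}]")
```

With 5.0% vs 5.8% on 10,000 users per arm, z is about 2.5 and the interval for the absolute lift excludes zero.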

Limitations of using Google Optimize data with the AGILE testing method

The limitations you need to be aware of are:

- you should split the traffic evenly across the variants and the control for the entire duration of the test. This can be achieved by using equal weights and serving Google Optimize server-side, which you would need to do anyway to test sitewide changes. (Updated Dec 2019: This is now the default behavior of Google Optimize with client-side implementations.)

- you can’t pause test variants during the A/B testing process.

- the maximum number of variants you can test against a control is 12 (a limitation of the software, not of the method).

Violating the first two will make your data incompatible with the AGILE A/B testing approach.
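If you serve variants from your own server-side code, one common way to meet the first two requirements is deterministic, equal-weight hash bucketing. The sketch below is a generic illustration under that assumption, not the mechanism Google Optimize uses; `assign_variant`, the experiment ID and the arm names are hypothetical. Each user is hashed together with the experiment ID, so the same user always sees the same arm, and every arm receives an equal share of traffic.

```python
import hashlib
from collections import Counter
from typing import Sequence


def assign_variant(user_id: str, experiment_id: str, arms: Sequence[str]) -> str:
    """Deterministically map a user to one arm, each arm equally likely."""
    key = f"{experiment_id}:{user_id}".encode("utf-8")
    # First 8 bytes of SHA-256 as an integer: effectively uniform for bucketing.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return arms[bucket % len(arms)]


if __name__ == "__main__":
    arms = ["control", "variant_1", "variant_2"]
    counts = Counter(assign_variant(f"user-{i}", "exp-42", arms) for i in range(30_000))
    print(counts)  # each arm should get close to 10,000 users
```

Because the assignment depends on the full list of arms, removing or "pausing" an arm mid-test would reshuffle users between the remaining ones, which is one more reason to keep the set of variants fixed until the test ends.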

These limitations are unlikely to result in worse ROI from your A/B tests. As argued in several papers on adaptive sequential designs, such as “On the inefficiency of the adaptive design for monitoring clinical trials” by Tsiatis & Mehta (2003) and “Worth adapting? Revisiting the usefulness of outcome-adaptive randomization” by Lee, Chen & Yin (2012), non-adaptive designs are actually always (slightly) more efficient than adaptive designs.

If you have any questions about how to link your Google Optimize account with our AGILE A/B testing calculator, be sure to reach out to us through our contact page.