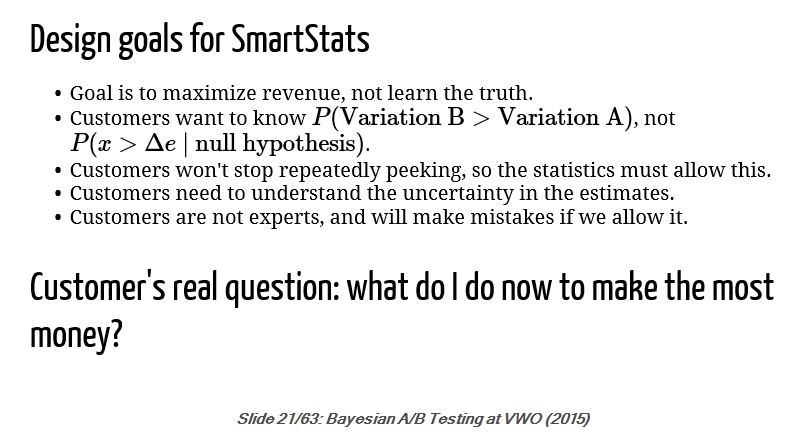

As someone who spent a good deal of time on trying to figure out how to run A/B tests properly and efficiently, I was intrigued to find a slide from a presentation by VWO®’s data scientist Chris Stucchio, where he goes over the main reasons that caused him and the VWO® team to consider and finally adopt a Bayesian AB testing approach they call “SmartStats”®.

Here is a screenshot of that slide (taken Sep 26, 2016):

In this post I’ll critically discuss the statements from the above slide (slide 21) from his 2015 presentation titled “Bayesian A/B Testing at VWO”. While the post is structured as a response to claims made by Mr. Stucchio, such statements are not at all unique to him so I believe the discussion below will be an interesting read for anyone considering Bayesian AB testing for similar reasons.

I’ll tackle these in the order they are presented, minding the context of the whole presentation of frequentist versus Bayesian approaches to statistical inference in the context of AB tests.

Claim #1: The Goal of A/B Testing is Revenue, not Truth

Goal is to maximize revenue, not learn the truth

This is essentially the same as the end statement on the slide: that the real question is “What do I do now to make the most money?”, so I’ll be effectively responding to both at the same time. Of course, such a statement is hinting that Bayesian methods can help you maximize revenue (about which you care), while frequentist methods are somehow “only” helping you discover “the truth” (about which you apparently shouldn’t care).

If we extend the logic of the original claim a bit further, then the actual goal is not really to maximize revenue, but to maximize profit so one is able to buy a new house, send the kids to a private school and spend a month in the tropics. If we extend it a bit further, then the goals is in fact not even that, but to maximize ones’ own happiness. As you can easily see such logic is totally bankrupt in addressing the issue, as it is a sort of slippery slope argument. On top of that I see it as a misrepresentation of what frequentist methods are devised for and can be used for, since there appears to be an implication that they lack capacity to assist in revenue maximization.

Of course, the “end” goal is to max revenue and other business metrics, but there is no better way to do that in the realm of A/B testing than to discover objective truths about the world the business operates in, as best as practically possible.

In adopting the methods and tools we use to achieve this goal one always considers the limited resources at his disposal and the inevitable uncertainties that result from inferences based on sampling, as well as the trade-off between level of certainty and the utility of being that certain. This is as true for frequentist methods as it is for any other theory and practice of statistical inference of any value, including Bayesian theory.

Establishing “the truth” is not a goal on its own right or some kind of an ultimate goal in a frequentist framework. As in any other human activity there are always particular goals we are interested in pursuing and thus – behavioristic rationale. This can in theory be expressed in terms of a utility maximizing function or utility function for short.

However, utility functions are complex, rarely even approximately formalized in practice and, ultimately, subjective, therefore it is deemed extremely important in frequentist statistics to be able to share the data and its characterizing statistics without mixing it with subjective input in terms of utility or prior beliefs. This allows for easy comparison, communication and ultimately understanding of what the data input warrants about a particular hypothesis at hand.

This is not so in many Bayesian approaches where utility or prior beliefs are mixed with the data and cause all kinds of issues, even when the belief is about lack of knowledge (“I know nothing”), as we’ll see when discussing Claim #2 below. Given that no two A/B tests are alike, that costs and thus the potential net benefits, ability and ease to reverse a decision and other important considerations differ greatly between experiments, it is important that statistical tools should not try to act as or present themselves as revenue-maximizing decision-making tools, especially when user input with regards to decision-making is minimal or non-existent.

No AB testing analysis tool can give a straight answer to the question “What do I do now to make the most money?” as this is a matter of decision-making – a problem far more complex than reporting statistics from an A/B experiment. It is in fact a huge part of the job of any A/B testing specialist, be it in-house or as a part of a conversion rate optimization agency or division.

My view is that a statistical tool’s purpose should be to help the user quantify a given parameter and the uncertainty, associated with the measure of its estimation, while leaving the interpretation and, most importantly, the decision-making on what is necessary to maximize revenue, to the end user. The first is a truth or fact about the world, the second is a revenue-maximization decision based on that truth or fact.

Claim #2: Frequentist Statistics Don’t Give Answers to The Question of Interest, Bayesian Statistics Do

Customers want to know P(Variation A > Variation B), not P(x > Δe | null hypothesis)

It seems the first part is supposedly what one learns using the Bayesian approach – a posterior probability of two hypothesis while the negated part is supposed to correspond to a frequentist p-value, providing an error probability regarding an observed test outcome.

I agree that the first part represents what many people want to know, but only if we understand probability here in very broad terms, e.g. as an interchangeable synonym to likelihood, certainty, risk, odds, etc. I don’t really think many people will agree to the full statistical definition of a posterior probability, which is: the posterior probability, resulting from a prior probability and a given data input.

That is, I don’t think many people involved in AB testing find themselves asking “What is the probability of Variant A being better than the Control given some data and our prior knowledge about Variant A and the Control?”. And that is what Bayesian approaches are designed to provide the answer to.

Below I elaborate on this fact and try to show why I believe adopting Bayesian methods raises more questions than it resolves. I’ll briefly present the frequentist case afterwards.

First of all, writing just P(Variation A > Variation B) is not fair as it appears simpler next to the full expression for the p-value to the right. To correctly state what people want to know is to write P(Var A > Var B | X0), or ideally P(Var A = μ > Var B | X0), where X0 is some observed value of a test statistic X and μ is a specific average value. It could also be written as P(x > Δe | X0). In other words: what is the probability that the average of Var A is equal to some specific value μ, which is that much higher than the average of Var B, given observed data X0.

This is called in Bayesian terms a “posterior probability” and unfortunately, for it to be known, a prior probability on Var A > Var B or Var A = μ > Var B is required, so the question becomes, in reality:

“Given that I have some prior knowledge or belief about my variant and control, and that I have observed data X0, following a given procedure, how should data X0 change my knowledge or belief about my variant and control?”.

Expressed using the original notation it looks like so:

P(x > Δe | null hypothesis) · P(null hypothesis) / P (x > Δe)

or, equivalently in a more verbal expression:

P( Observed Difference between A & B > minimum difference of interest | Variation A = Variation B) · P(Variation A = Variation B) / P (Observed Difference between A & B > minimum difference of interest)

both of which come straight from the Bayes theorem. Compare to the original expression of “P(Variation A > Variation B)”.

Doesn’t look so innocent and simple now, does it?

As you can see, some very tough questions emerge once the full definition is put forth.

Questions like: How do you estimate a prior probability with no prior data? On what grounds should someone agree to your chosen prior? Does the chosen prior allow for new data to count as evidence for or against Var A > Var B and to what extent (how dogmatic is your prior)? What is the associated severity of probing of a particular test? How does information about the data-generating procedure enter into your prior, e.g. the stopping rule? How exactly do you interpret the resulting posterior probability, especially in cases where the prior is improper?

Although some Bayesians would like you to think that interpreting posterior probabilities is easy as cake, while p-values are non-intuitive as hell, the truth is quite different as Prof. Mayo eloquently demonstrates in her response to a criticism on interpretations of p-values.

The hard questions above result from mixing subjective beliefs, background knowledge or utility functions in with the reported statistics. A point of significant contention in the case of AB testing is how to choose a prior in a situation of no prior data about your variant and your control. It is hard simply because priors are supposed to represent knowledge, not lack of it.

In such cases Bayesians try to come up with “objective priors”, also called “non-informative”, “uninformative”, “vague”, or “weakly informative” priors. The issue of representing lack of prior knowledge in a Bayesian framework is still one of heated debate, as visible from the Wikipedia article on it.

To briefly sum up why, let’s consider a naive, but intuitive approach which is to use flat priors, that is to say that “all possible hypothesis are equally probable”. However, that is obviously very different from saying “I don’t know what the probability of each of these possible hypothesis is” and has significant implications in the resulting inferences and experiment conclusions. It is accepted by most that such priors are in fact vastly informative as their effect on the posteriors is incompatible with lack of prior knowledge. Some approaches try to come up with non-informative priors based on them having good frequentist properties (MLE-based or similar), but then the question is why not just use frequentist approaches to problems where no prior information is admissible and we have an experimental setup to boot?

The way a frequentist approaches inference in general is to separate data and statistics from belief, prior knowledge or utility as much as possible. That’s why a frequentist statistic (e.g. a p-value) can only give you an error probability bound, nothing more, nothing less. It doesn’t give you an automatic inference by itself, no matter how tempting the idea of having an automatic decision making machine might be. What conclusions you draw from a statistic and what decisions one makes is to be addressed in the test design and depends on the task at hand. A “low” p-value / “high” confidence should not be used as an automatic true/false classification device as proper conclusions and decisions from a test depend on more than the observed error probability. (“low” and “high” in quotes to signal the implied question “Compared to what?”)

Whether a given error probability is good enough for us is something that should enter into our thinking during the test design phase and it shouldn’t have anything to do with the objectivity of our statistical measures after the test. This separation of data and its error statistics on one hand, and the decision whether it should be accepted as good evidence in support of or in refutation of a hypothesis is what makes frequentist statistics generally easier to understand, communicate and compare, when put side to side with their Bayesian counterparts.

A second issue with Claim #2 is that it’s a bit strange to hear that marketers care about “Var A > Var B” without mentioning a minimum difference of interest, given that the effect size is of crucial importance to the interpretation of the result in light of the end goal. That is, from a utility standpoint a trivial (given the current case) difference between Var A and Var B is not going to be viewed the same as a substantial difference that would have a significant impact on the business’ bottom-line. A business won’t be willing to commit equal resources to gain a 0.01% lift compared to a gain of 5% lift.

The difference in practical outcomes in both cases can easily be the difference between the decision to continue or halt A/B testing altogether!

With this in mind, we can say that ideally the end-user is interested not in P(Var A > Var B | X0), but in P(Var A = μ > Var B | X0) where Δ(μ, Var B) is non-trivial. It is possible that the > sign was intended as a shorthand for a non-trivial difference, but it would be nice to have that explicitly stated, especially given that mistaking statistical significance with substantive difference is a common criticism on the interpretation of frequentist statistics, AB testing included…

Finally, in frequentist terms the p-value computation is not that of a mathematical conditional probability, as depicted by the “|” sign. It would be quite odd to mathematically condition the probability of something on a hypothesis being true. In probability theory one can only condition on things that are random, but a hypothesis is not a random variable or event as it is not something that can happen. It is either true or false.

The p-value is in fact a computation of marginal probability of an event given a statistical hypothesis and a statistical model. The error probability is therefore deductively entailed by the probability distribution and the hypothesis in question. Thus the frequentist case should more properly be expressed not as P(x > Delta(e) | null hypothesis), but as P(x > Δe ; null hypothesis) thus treating the null as given, not as a random variable. It might seem as a small remark, but there are significant inferential implications of changing the notation so I thought it’s important enough to include it in this discussion.

Claim #3: Statistical Tools Should Just Assume the Worst

Customers won’t stop repeatedly peeking, so the statistics must allow this

Optional stopping is a huge issue in AB testing in online marketing. I’ve already done several articles on it (“The Bane of AB Testing – Reaching Statistical Significance“, “Why Every Internet Marketer Should be a Statistician“) and I’m the first to say something should be done about the problem, but I don’t fully agree with both the logic in the above sentence and the way the problem seems to be addressed by some vendors.

We should expect that some people will peek at data, but I believe the right way to address the issue is not to just assume that and treat all people as if they are peeking all the time. That would be akin to treating all people like bad drivers who crash their cars into electricity poles, which is just not fair to those who are good and diligent drivers!

I’m on the opinion that we should instead give people the knowledge about the effect of unaccounted peeking on their conclusions and to also provide them with tools to do it in a controlled way and account for it when analyzing test results. This way users who abide by a monitoring plan will be rewarded with significantly shorter AB test times. If we try to maintain the metaphor, this would be like telling all people about the consequence of crashing into an electricity pole, pointing out the many bad outcomes, e.g. your journey time increases significantly, there will be damage to you an the car, etc., and giving them proximity sensors so they can adjust their course before they crash, should they attempt to steer their vehicle into a pole. That’s a significant efficiency win for everyone!

I’m a fan of giving people a choice to do better than average, and rewarding them for it, instead of treating them all as doing worse than average. Of course, users need to be made aware of the trade-off: the more you peek, the more time and resources you should be prepared to invest in the test, should you run into a worst case scenario, but ultimately it’s the user who should make the decision about how much or how often they want to peek.

Conveying this can be as easy as showing how much time it would take, on average, and in the best and worst cases, if they choose to peek a given number of times during an A/B test.

Claim #4: Statistical Tools Must Convey Uncertainty to the User

Customers need to understand the uncertainty of the estimates

Absolutely, that is part of my argument against Claim #1 above. That’s why estimates of the p-value, confidence interval and percentage lift should be provided, adjusted accordingly. Decision guidance can be provided as well, to the extent of a proper interpretation of what the data is evidence for and of what it doesn’t warrant.

I don’t see how Bayesian credible intervals are any better in this regard than their frequentist cousins. I’m willing to go further and say that in the case of a closed system for which you don’t even know the priors being used, they might actually be worse from the point of an end user.

Claim #5: We Should Not Allow Customers to Make Mistakes

Customers are not experts and will make mistakes if we allow it

I agree that most customers are unlikely to be experts in statistics, but I don’t view that as a necessity for proper usage of a statistical tool. I think basic education and a tool design that helps guide the user in the right path, has clear wording and offers contextual clarifications is what we should strive for.

The fact that users will probably make mistakes, if allowed, is logically true, but I don’t think we can jump from that to being willing to pay a certain cost in order not to allow users to err or cheat. No matter what statistical framework one is operating under, any attempt to prevent “mistakes” or “statistical cheating” comes at a cost for users. Since the tool is not aware of actions not captured in the available data, it must assume that everyone is making mistakes all the time, if guarantees against such mistakes are to be given.

The cost of doing so is that users who don’t make mistakes and don’t try to cheat are punished by worse than optimal performance in their A/B testing efforts (e.g. slower tests). I would say that they are in effect “paying” for the potential mistakes of others and I don’t think this is fair to them. Why should some users be penalized with worse performance for deeds they didn’t do and didn’t even had the intention of doing?

I think if enough is done to assist well-intentioned users in making proper choices pre-test and in doing proper inferences post-test, then the job of the tool creator is done.

The above was my response to what are presented as key points in the decision of VWO® and likely others to turn to Bayesian approaches and my reasoning for why I think they are a poor justification for abandoning frequentist approaches for Bayesian ones. Thoughts on my take on the issue are welcome in the comments below.

As a matter of full disclosure: I’ve recently proposed a flexible and efficient statistical method for conducting A/B and MVT tests in a fully frequentist framework – the AGILE AB Testing method. You can check out my whitepaper and, of course, our AGILE statistical calculator that helps you apply it easily in practice.

Update Feb 28, 2020: A sort-of part 2 of this debate dealt with rampant misrepresentations of frequentist inference in the Google Optimize Help Center. Part #3 of frequentist vs Bayesian revisits two of these issues and adds more to the list: Frequentist vs Bayesian Inference.