This article deals with the problem of Google Analytics referrer spam (prominent referral spammers are semalt, darodar, buttons-for-website). I explain why and how it actually happens and why currently proposed solutions fail, and I describe a working, albeit imperfect, solution for the issue.

Contents:

- The problem of Google Analytics referrer spam

- How does referrer spam work?

- How NOT to fix referrer spam

- A working solution (part 1)

- Solution part 2

The problem of Google Analytics referrer spam

A lot of webmaster forums and blogs are lit up by a fairly new issue: Google Analytics referrer spam. That’s basically phony traffic appearing in your analytics reports, claiming that users came to your site referred by a domain that doesn’t really have a link to you. Curious about the traffic, which often also has a 100% bounce rate, you go to the referrer URL, only to be bombarded by ads or worse: malware, sneaky affiliate redirects, etc. Some of the spammers want to sell their online marketing services to website owners hungry for traffic.

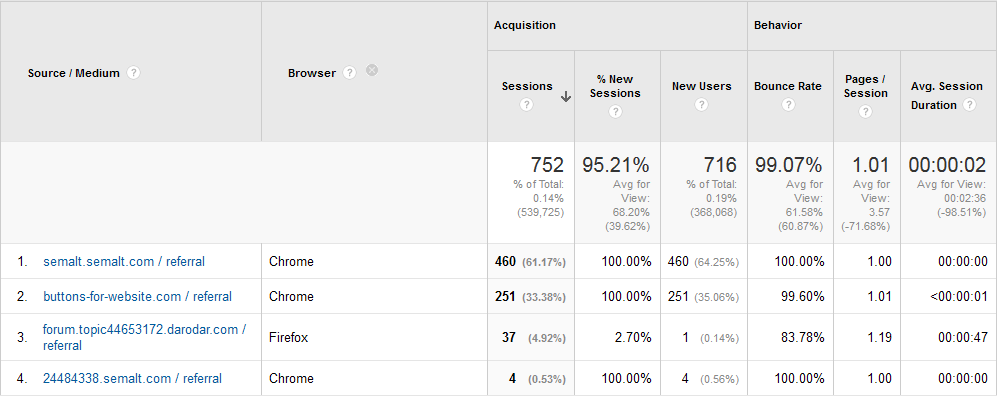

Here is what it might look like for you:

Here we see some of the worst offenders: semalt, darodar and buttons-for-website. If you are seeing referrer traffic from such domains, you can be sure that it is referrer spam. Ideally you SHOULD NOT VISIT those URLs to check them out. If you do visit, make sure you have good anti-virus software or use a virtual machine.

Why is such traffic a problem? First, it wastes your time, just like plain old e-mail spam. Second, it pollutes your stats and skews your metrics, especially if you have a site with fairly little traffic (which is most sites). Third: see above.

How does referrer spam work?

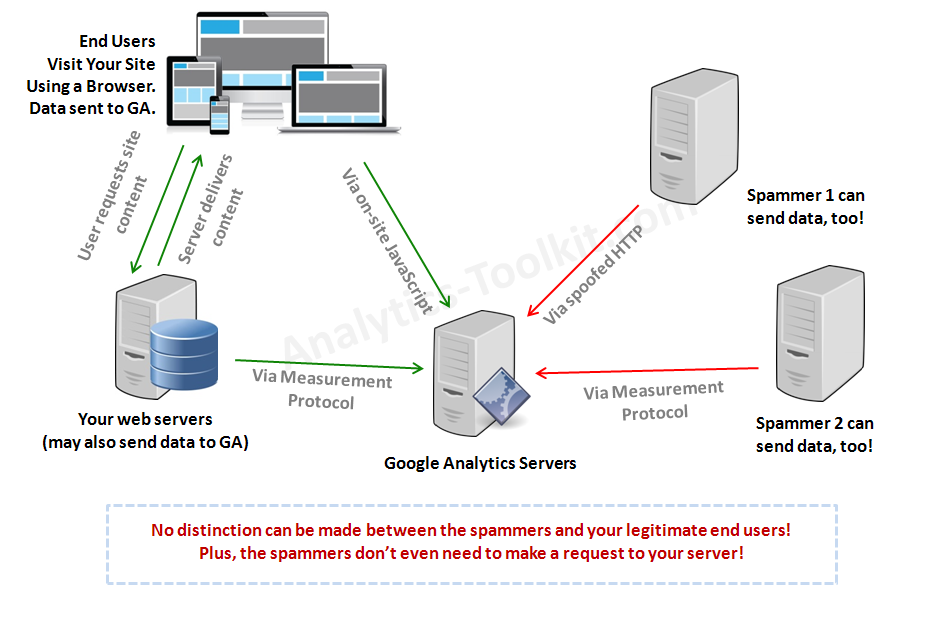

Many people assume that since a session is registered in Google Analytics that means somebody – a person, or a software robot, visited their site and that visit was picked up by GA. They would be wrong in the case of referral spam and in general. Google Analytics relies on the client’s browser to execute a certain piece of JavaScript that makes an HTTP request to the Google Analytics servers, which then register a hit on the website and sets up a cookie on the user’s browser. However, the HTTP request can be sent by any device connected to the internet and if it contains all requisites it will still register a hit with Google Analytics.

Furthermore, not so long ago Google announced the Measurement Protocol which provides an interface for a server to communicate to Google Analytics directly via an official protocol. This was possible before, but was not documented. It’s intent is to allow companies to connect different systems to Google Analytics and to collect offline data to accompany the data, collected by web surfers. Examples of systems that would use the protocol are phone-tracking solutions, POS systems, offline reservation systems, CRM systems, etc.

However, since there is no authentication in this whole process, virtually anybody can send hits for your website, just by knowing your UA-ID (UA-XXXXXXX-XX). This is exactly what spammers are using to pollute your data.

Here is a scheme of how all this works:

Everything can be spoofed (forged) during these interactions. Hostname? Yes. Referrer data? Yes. Campaign tracking data? Yes. URL paths? Yes. And so on, you get the idea. This is well documented in the Measurement Protocol parameter reference guide.

As you can see on the scheme above, a spammer doesn’t even need to connect to your server in order to push his referrer spam. He’s connecting straight to the Google Analytics servers, thus leaving no trace of his activities on your side. This also makes it impossible to cut him off by standard security measures on your side.
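To make the lack of authentication concrete, here is a minimal sketch of what the body of a single Measurement Protocol pageview hit looks like. The parameter names (`v`, `tid`, `cid`, `t`, `dh`, `dp`, `dr`, `cs`) come from the Measurement Protocol parameter reference; the helper name `build_hit` and all the values are placeholders made up for illustration. The snippet only builds and prints the payload, it does not send anything.

```python
from urllib.parse import urlencode, parse_qs

# Universal Analytics Measurement Protocol collection endpoint.
ENDPOINT = "https://www.google-analytics.com/collect"


def build_hit(tracking_id, client_id, hostname, path, referrer, campaign_source):
    """Return the URL-encoded body of one pageview hit (hypothetical helper)."""
    params = {
        "v": "1",               # protocol version
        "tid": tracking_id,     # UA-ID of the property that receives the hit
        "cid": client_id,       # anonymous client ID, chosen by the sender
        "t": "pageview",        # hit type
        "dh": hostname,         # document hostname: whatever the sender claims
        "dp": path,             # document path
        "dr": referrer,         # document referrer
        "cs": campaign_source,  # campaign source
    }
    return urlencode(params)


# Placeholder values only; none of these identify a real property or site.
body = build_hit(
    "UA-XXXXXXX-XX", "555", "yourdomain.com", "/",
    "http://spam.example/", "spam.example",
)
fields = parse_qs(body)

print(ENDPOINT)
print(body)
print("claimed hostname:", fields["dh"][0])
```

Note that nothing in the payload is a secret or a signature: every field, including the hostname, is simply asserted by whoever builds the request.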

How NOT to fix referrer spam

Now that we know how the spam is actually done, let’s quickly go over some solutions that I see proposed on several blogs and forums, and why each of them will not help you:

1. Apply a GA view filter by “Referrer” to exclude traffic from these referrers.

-> This doesn’t work as they are not setting the referrer field, rather the Campaign Source field.

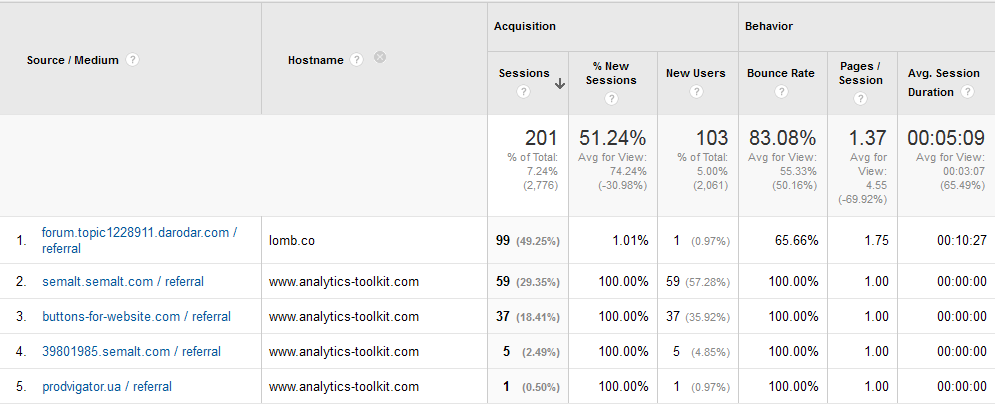

2. Apply a GA view filter to allow only traffic that originated only on your hostname (yourdomain.com).

-> This doesn’t work as they can easily set the hostname to your own. And they actually do:

As you can see, most of the spammers faked the hostname with ease. The rest will soon follow…

EDIT: see Solution UPDATE below.

3. Exclude the traffic from the “Referrer Exclusion List” (if you are using Universal Analytics).

-> Referrer Exclusion doesn’t work like that. It’s for preventing sources from starting a new session while a current session is active, e.g. third-party-hosted shopping or payment solutions like PayPal.

4. Any kind of server traffic monitoring and attempts to exclude traffic on the server level (htaccess, firewall, etc.): by user-agent, by referrer, by IP…

-> Since no interaction with the server is required for the spam to occur, these are all pointless.

5. Use the bot-filtering feature in Google Analytics

-> Tested: doesn’t help one bit with those referrers and I don’t think it was created with such intentions in mind.

6. Exclude whole geographical regions that are deemed responsible for the spammy traffic

-> A drastic solution, but sadly it’s likely that it won’t work, since the geographical data can also be spoofed via the Measurement Protocol. Also, such an option is not available to all website owners.

7. There is a curious approach that relies on the observation that currently spammers only fake visits to your homepage: “/”. It suggests changing legitimate requests for the homepage to a different URL using simple customizations of the Google Analytics JavaScript. Putting aside the many inconveniences this causes for an analyst, it’s very, very easy for a bot to just send data to a URL other than “/”, so I would not recommend going this way, even if it may work initially.

A working solution

(Last update: August 6th, 2015. Updating further is impossible due to the large volume of spammers and the multiple, constantly changing filters required to keep them out. Use as a starting point only.)

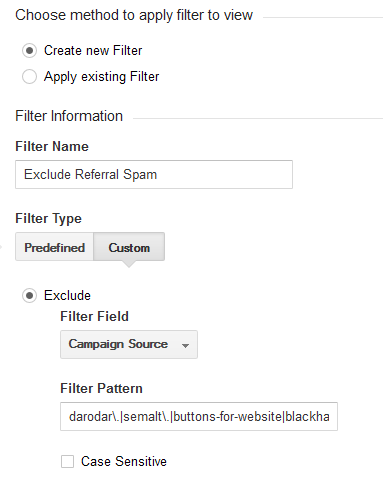

Currently, the best solution is to apply view level filters to exclude referrer spam (not retroactive) and also report filters and advanced segments (retroactive). You can use this regular expression to block a few of the most annoying spammers out there (pretty much every site I have access to is affected by at least two of these):

darodar\.|semalt\.|buttons-for.*?website|blackhatworth|ilovevitaly|prodvigator|cenokos\.|ranksonic\.|adcash\.|(free|share|social).*?buttons?\.|hulfingtonpost\.|free.*traffic|buy-cheap-online|-seo|seo-|video(s)?-for|amezon

You need to construct a filter as shown in the screenshot bellow:

Or, alternatively, you can construct a custom dimension using the same expression. If you spot a new referral spam domain you need to add a vertical line (“|”) and then the name of the domain. Escape dots by adding a backslash (“\”) before them. As you can see I prefer not to add the whole domain, but just the unique part of it, but this would vary between domains.
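Before applying the expression to a view, it can help to check locally what it would and would not catch. The sketch below runs the pattern above against a few sample source values. It assumes Python's `re` module behaves closely enough to the Google Analytics filter engine for these simple patterns; the helper names `is_spam` and `add_domain` and the sample values are made up for illustration.

```python
import re

# The exclude pattern from the article, copied verbatim.
SPAM_PATTERN = (
    r"darodar\.|semalt\.|buttons-for.*?website|blackhatworth|ilovevitaly|"
    r"prodvigator|cenokos\.|ranksonic\.|adcash\.|(free|share|social).*?buttons?\.|"
    r"hulfingtonpost\.|free.*traffic|buy-cheap-online|-seo|seo-|video(s)?-for|amezon"
)


def is_spam(source, pattern=SPAM_PATTERN):
    """True if the pattern matches anywhere in the source value."""
    return re.search(pattern, source) is not None


def add_domain(pattern, fragment):
    """Append a new alternative after a '|', escaping dots with a backslash."""
    return pattern + "|" + fragment.replace(".", r"\.")


samples = [
    "www1.semalt.com",     # subdomains match too: the pattern is not anchored
    "social-buttons.com",
    "best-seo-offer.com",
    "google",
    "example.com",
]
for source in samples:
    print(source, "->", "excluded" if is_spam(source) else "kept")

# Adding a hypothetical new spam domain to the expression.
extended = add_domain(SPAM_PATTERN, "newspammer.")
print(is_spam("newspammer.example", extended))
```

Keep in mind that broad fragments such as `-seo` and `seo-` would also exclude a legitimate referrer whose domain happens to contain them, so it is worth running your real referrers through a check like this first.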

Unfortunately, as you probably understand, this is not a permanent solution, since we are ultimately entering a game of exhaustion. How fast and how many spam domains can the spammers register? How fast can you add them to your filter’s regex? How much productive energy will be lost in this uneven battle? It takes a spammer an hour to set up a new domain and start spamming; it takes hundreds of thousands of analysts/marketing specialists/webmasters significant time to weave through stats, to identify suspicious traffic and to update filters accordingly… This is why it’s only a short-term solution.

If you are managing more than a couple of Google Analytics accounts we would strongly recommend checking out our fully automated tool for tackling the problem: the Auto Spam Filters tool. It eliminates & protects against referrer spam & other ghost traffic. It’s a set-and-forget, 1-click solution that works across 100s of properties and views. The filters are frequently updated for protection against new spammers that inevitably show up.

UPDATE: Solution Part 2:

(April 29th, 2015)

Referrer spam is getting worse day by day, Google has not come up with anything to help us deal with it (see the end of this post and my other post on the issue, linked below, as to why), and we are forced to manage this avalanche ourselves. I’ve therefore started deploying an additional filter to clients’ accounts in order to prevent some of the new spam from coming in. This filter INCLUDES ONLY traffic with the hostname field set to a predefined set of values. Yes, it can be spoofed, but the less sophisticated referrer spammers who just leave this field as (not set) or set a random domain name here will be filtered out. And there are enough of these to justify setting up such a filter.

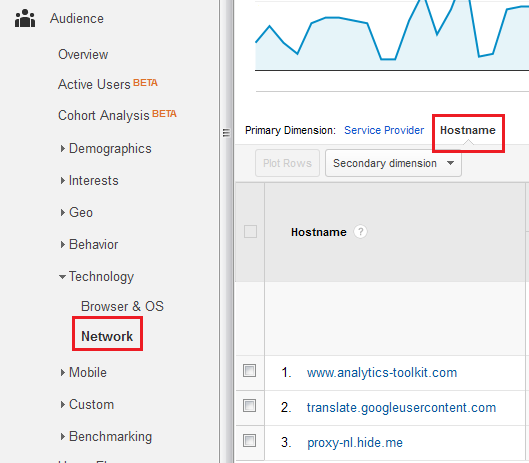

Here is how to set it up:

1.) Go to your Hostnames report and select a date range of a month or more. This will show you the hostnames you need to consider for inclusion.

From the screenshot above it is obvious that I only need to include www.analytics-toolkit.com for this particular view. I would not recommend including translate.googleusercontent.com in most cases, even if that means losing some stats, as more adept spammers will just use this hostname to bypass the filter, without the need for a crawler or a third-party UA-ID to hostname database.

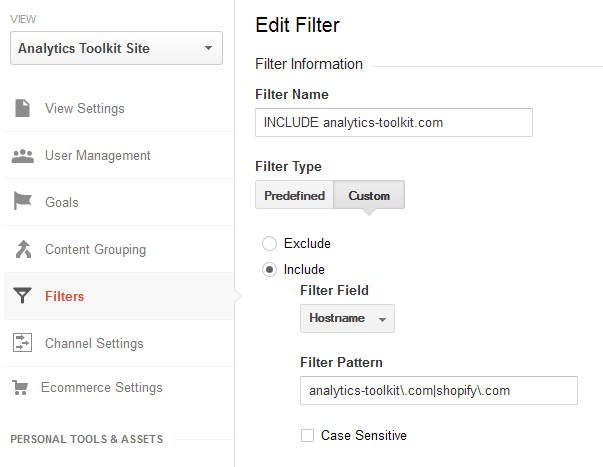

2.) Construct and apply the filter.

You would want to use some basic regex here. For example, if I want to include all traffic to analytics-toolkit.com and a third-party shopping cart, hosted on shopify.com for example, here is what that would look like:

analytics-toolkit\.com|shopify\.com

DO NOT APPLY the above filter to your site without modifications!

It is very important to get this filter right, as otherwise it might result in missing statistics. Also, it does require some maintenance: e.g. when moving domain names, when adding new domain names (third-party hosted shopping carts, for example), etc. As always, keep a view with the raw data just in case.
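Since a mistake in the include filter silently drops real traffic, it is worth testing the expression against the hostnames from your Hostnames report before applying it. The following sketch does that for the example pattern above. It assumes Python's `re` module is a close enough stand-in for the Google Analytics filter engine; the helper name `is_kept` and the sample hostnames are made up for illustration.

```python
import re

# The example include pattern from the article. Adapt it to your own hostnames.
INCLUDE_PATTERN = r"analytics-toolkit\.com|shopify\.com"


def is_kept(hostname, pattern=INCLUDE_PATTERN):
    """True if a hit with this hostname would pass the include filter."""
    return re.search(pattern, hostname) is not None


hostnames = [
    "www.analytics-toolkit.com",        # the site itself
    "shop.shopify.com",                 # third-party hosted cart
    "(not set)",                        # typical ghost hit
    "translate.googleusercontent.com",  # deliberately not included
    "analytics-toolkit.com.spam.example",
]
for hostname in hostnames:
    print(hostname, "->", "kept" if is_kept(hostname) else "filtered out")
```

The last sample is kept because the pattern is not anchored and matches anywhere in the hostname. If that matters to you, one option is to anchor the alternatives at the end, e.g. `(analytics-toolkit\.com|shopify\.com)$`, but test that against your own hostnames first.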

Again, if in your line of work you are managing more than a couple Google Analytics accounts (e.g. you are a digital agency, CRO agency, etc.) we would strongly recommend our newly launched fully automated solution to the problem. You can now use the Auto Spam Filters tool to eliminate & protect against referrer spam & other ghost traffic. It’s a set-and-forget, 1-click wonder that works across 100s of properties and views. The filters are frequently updated for continued protection.

Is a long-term solution even possible?

If an authentication mechanism can be used to authenticate who can send data to which UA-ID, then yes. However, the implementation of Google Analytics, no matter if it’s the usual JS tracking or the Measurement Protocol, ultimately relies on an unidentified client machine to send the HTTP request. Thus, any such request can be spoofed/forged and there is no workaround for this that doesn’t require altering the very core of the Google Analytics tracking functionality in a very, very significant way.

Google has known about the issue since at least 2013 (likely much earlier), as confirmed by a reply from Nick Michailovski, one of the core people in the Google Analytics team, in a Google Groups thread. His exact quote:

We’ve looked at this. If you are doing a client-based implementation, as long as you can get the http request from the client, then you can spoof the request.

So unless Google decides to dramatically change the very core of the tracking part of GA, we are left to deal with the issue of referrer spam ourselves. And there is precious little we can actually do…

Does it end with referrer spam?

The exploit that is used to send tons of referrer spam with ease can be used for much more malicious purposes. The integrity of ALL your Google Analytics data is at risk, and it appears that a willing and able attacker has vastly more options to attack than you have to defend yourself.

However, this is a topic for a whole new article: Malicious Attacks on Google Analytics Data Integrity.

Great explanation of the problem. Big oops for the Achilles heel in Google Analytics. But don’t be ridiculous. Of course there is something you can do. OWN YOUR METRICS. Take responsibility for your web site, your web server and your web log files. By all means use Google Analytics, it’s free and powerful, but it is limited as well. And if that’s all you do and for most webmasters and most websites who cares if there are some anomalies in the stats that can’t be explained? It’s not a big deal. But if it is a big deal for you and your site and all you’re using is GA, then you’re just being lazy. Learn how to read and analyze your own web logs. You can do it. You can do it manually – look at them in a text file and start getting to know them, or you can do it with a database (Access reads web logs very easily, SQL databases can import them), or use a tool that is designed for the job like Webtrends or use one of the open source web log analyzers. Come on!

Well, that’s how I started 8-9 years ago, but web logs are really limited in what you can extract from them. Also, most software was a lot harder to use in terms of usability, at least back then… Anyways, I don’t think we’ll get back to weblog analyzing as a primary tool and I’m fairly sure a lot of servers have something like Awstats running daily, but it still doesn’t help you, even if you identify that you are under attack. You can check my next article for more on this (linked to from the bottom of this one).

From an analyst’s point of view, I am not very interested in referrals that have a 100% bounce rate and 00:00:00 session duration. I commonly use an advanced segment to exclude them from my results.

Now it remains that one should not try to identify the website that is under the referral link. But why should we?

This is the referrer spam we see now, but it can and will likely be altered so that bounce rate is not 100% and session duration is a couple of minutes. Also, many people would like to explore why a traffic source with visible volume is having 100% bounce rate – maybe the reason is in the site or maybe it’s the intent the user is sent to the site with – which you can’t estimate unless you visit the site.

Also, this is just like e-mail spam. Not everyone is as savvy as us and the percentage of gullible or simply uninformed webmasters/marketers/analysts/business owners needed to sustain such an operation is likely not that high…

Unfortunately I have to agree with you.

Do you have any contact with Avinash Kaushik? I searched his blog for referrer spam and couldn’t find a word on the subject. Weird, no?

I am going to tease him on that.

Teased him about it on LinkedIn, no luck…

Good post, Georgi. One clarification, though: filtering on your own hostname DOES work, and it works really well. It just doesn’t work against the bots that actually visit your site like semalt and buttons-for-website and all those .ua domains. I know they visited my site because they triggered event tracking I use for time-on-page measurements.

So far, all of the ghost referrals are still using incorrect hostnames. While they could use your real hostname, doing that is still a significant effort they haven’t made yet.

In the meantime, I didn’t have to worry about adding a filter for social-buttons.com and 5 of its predecessors.

Well, Mike, how would filtering for my hostname work if they really visit my website? Won’t that also mean that they’ll be using the correct hostname, as shown in my screenshot from the GA account of this very site? As you can see they have the correct hostname (doesn’t matter if they visit or if they use MP) so what hostname filter do you propose that would filter these out?

For a few days now I have been getting unwanted traffic from a referral source. I didn’t know exactly how I was getting this traffic. After doing some research and analysis, I found that it is spam.

Now it’s time to remove the spam from my Google Analytics,

thanks for sharing this informative post.

I am growing so tired of this. As a consultant who maintains a slew of sites Google needs to have a top level control, not just site wide but administrative wide. Otherwise every time a new variant of these are found it takes an hour or more for me to go through each and every filter/rule/setup and push new changes down.

It’d be great if Google would be proactive and make it easy to eliminate things like these with a click. For years now I’ve been hopeful. Growing tired of being hopeful. 😉

Absolutely, Andy. That’s part of the reason we’re trying to bring attention to the issue.

I second that!

Have you noticed that these spam URLs are somehow notified when an account change happens? That it somehow triggers something? I have had two clients change their accounts/UA codes recently and they were subsequently bombarded with spam URLs.

Not really, but I’d be interested in hearing more about it 🙂

Great article, but I get this error.

Verification filters

This filter would not have changed your details. The filter settings may not be correct or that the data set is too small.

Any tips?

Thank.

This is completely normal in some cases. Maybe you have not received spam from these sources in that view for the past 7 days, or maybe your site has too much data and the sampling even for 7 days is too high to detect the filter change.

Hi, thanks for tips, I was able to get rid of most of the fake referrers using the ‘campaign source’ filter, however some of them are still slipping through with hostname (not set). Is there any way to filter it out? Thanks

You can try adding an additional filter to include only visits from the hostnames that you know are legit for your site. It will prevent a portion of the new spam from coming in. I’m starting to do that on some properties now, as a preventative solution. I’ll probably update the article to include that as well…

Hi, thanks for the great article. However I followed your instructions exactly and I’m still getting all the referral spam appearing in my data. Any idea what I’m doing wrong? I can send some screenshots if it helps?

OK it was working but I had assumed that the filter would apply to previous data as well (I’m pretty new to using filters). Might be worth pointing out in the article for other rookies like me.

Again thanks for the article!

I did point it out: “Currently, the best solution is to apply view level filters to exclude referrer spam (not retroactive) and also report filters and advanced segments (retroactive).”

Why ‘Campaign Source’ and not ‘ISP Domain’ in the filter?

Because ISP Domain has nothing to do with referrer spam, or at least nothing relevant to blocking it…

Thanks a lot for this article, very informative and straight to the point

Thanks for the useful article. Amongst the possible solutions I have contemplated – for example, simulating the analytics.js calls but making the target the originating web server, excluding the UA-ID from that submission, then reinject the UA-ID in a Measurement Protocol post from the web server to the GA servers (with some suitable session keys between webserver and browser) – the main thought I had was: are the “spammers” merely brute force submitting their junk by cycling through sequentially generated UA-ID values or are they visiting sites at least once in order to learn the real ID? Either way, it suggests that Google can only deal with the problem long term by introducing authentication (as you say) involving the originating webserver to exchange information not visible to the client (browser). Any thoughts?

By the way, I have another simpler possible solution involving altering the standard GA JS fragment (by adding a custom variable) and then filtering on that variable. It would require the spammers reading / parsing the real site pages to determine that code – they could do it, but most wouldn’t bother. Takes about 10 minutes to set up.

Hey Mark,

Thanks for your comment. I don’t think the first solution will accomplish much and it’s also far too complicated to adopt. Most spammers, it appears, are now just brute-force submitting junk. Some are visiting the sites, but are currently the minority.

The hit-level custom variable/custom dimension solution is another one which should work, and I am aware of it. It is much more complex to set up than the filters above, while it gives little to no improvement in filtering out spam compared to the simple filters above. If a crawler is involved, it can intercept the custom variable and use it in its requests, so it’s not a bulletproof solution. Little to no marginal utility + more complex and more costly to set up = inferior option.

Hi,

I noticed a significant increase in my bounce rate. I think my site is being hit by some kind of bot that raises my bounce rate. How can I check? And more important: what should I do? Thanks for your help.

Hi Bill,

Check your Technology > Hostnames report for “(not set)”. If present in visible quantities it is most likely ghost hits – spam. In any case you’d want to apply both filters provided as solutions above.

Hi Georgi,

Thanks for this, I’ve read a tonne of articles about this – my knowledge is limited! – and yours has been the easiest to read and follow. You haven’t just given me the answer, you’ve told me what to do if a url that you haven’t included is visiting my site. A new one has just popped up in the last few days and I really appreciate you taking the time to pull this post together for people like me who have limited skills in this area!

You are super.

x

You’re most welcome 🙂 Thanks!

Hello, and thank you for a very informative post.

Can you please expand a bit regarding how (not set) should be dealt with?

Thank you!

This is the second part of the solution above. Just apply it and you should be fine.

OMG!!! Thank you soooo much..

I have been looking for a solution and got it finally.

I have a doubt though, why is the source set as campaign source? Does that category include traffic from any source???

And does this have anything to do with our server? because i have been seeing same traffic in all of my sites (in same server) 🙁 . It seems like a contagious thing for me.. Can you please share your thoughts?

Thanks again. I am definitely trying this!!

Hi Mivid,

If you read through the post you’ll see that it has nothing to do with your server and I explicitly say so. Yes, you need to filter the campaign source dimension since that’s where the spam is appearing. Make sure to apply the second part of the solution as well (the INCLUDE your domain filter).

Cheers

Thanks a lot Georgi that’s really useful. I too was looking at filtering some of my clients data by Referral and using the URL as I have quite a specific list of persistent spammers. Campaign source was a better idea – thanks!

In my opinion the only real solution to this is in the hands of Google, it’s their tool and this spam is devaluing it. I’m sure that many small business folk do not want to waste valuable time modifying files, setting up filters etc etc. So more and more will stop using GA.

I have found my own solution – stop looking at the reports!

Hi. Why is it not working for me??

I’ve tried putting filters like yours on but I still get the same (“excluded”) spam show up in my reports! I don’t understand. I am following the guides exactly. And this is after more than 30 days of filtering. Thanks

Hi Antra,

Sorry, I can’t help with a general question like this one. The solution is above, maybe check again to see if yours is correct to the letter? It works for tons of views that I and others applied these filters to…

Georgi

Hi,

I’m far from an expert on this topic so I’m afraid I’m doing my best with a layman’s understanding, but when I tried to do the INCLUDE filter for my site name, it filtered out everything that wasn’t a .com. Thus if anyone views my site via .co.uk, .fr, .ca etc. it gets excluded from analytics. Is there a way around this?

I don’t understand why you would do that. The instructions in point #2 of the solution above should be fairly straightforward to follow so that you have a proper include filter by hostname.

Hello Friends.

Thanks for the above information.

I have an issue about Referral unwanted traffic. I want to remove the referral traffic from the website, Below is pattern which I have used for to remove the referral traffic.

1) Admin > Filter > Filter Type > Custom

2) Select Exclude

3) Filter field > referral

4) Filter Pattern – trafficmonetize\.org|4webmasters\.org|webmonetizer\.net|dailyrank\.net|timesjobstrafficmonetize\.org|4webmasters\.org|webmonetizer\.net|dailyrank\.net|timesjobs\.com|indeed\.co.in|100dollars-seo\.com|recruit\.net|semaltmedia\.com|best-seo-offer\.com

While verifying, I am getting the message “This filter would not have changed your data. Either the filter configuration is incorrect, or the set of sampled data is too small.”

To create a successful filter, does the verification need to succeed?

Can anyone please help me understand the message, check the filter pattern, and tell me how to create the filter successfully?

Yes, this would not work. Please use the solution in the article above and you would have no issues. You need to exclude based on “Campaign Source”, not on “Referral”.

Great post. Thanks. I have been seeing a lot of spam traffic from www1.semalt.com, www2.semalt.com, 324422.semalt.com, etc. How do you filter out all of the sub-domain variations of a domain? What would the regular expression look like? I’m assuming there would be some sort of wildcard used? Thanks a lot.

Just apply the filters in the post, they’ll take care of all of these subdomains 🙂

Hi,

thx for this post!

I’m filtering out the spam in my accounts exactly as you described here. However today I stumbled across an annoying issue with analytics.

I kept updating my RegEx for the filter and adding new spam domains and today GA told me that the filter field should only hold 250 characters.

Did anyone here come across this problem?

I believe to solve that I have to create a new filter for the same view, but that really is somewhat annoying.

Well, I’ve not run into this issue, since after you apply the two filters above you shouldn’t have spam left. Also, you can revise old regexes in order to shorten them. I’ve done so a couple of times myself. If you do go above the limit, however, then you need to create and apply a second filter. I haven’t had to resort to that so far.
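For anyone who does hit the length limit, splitting the expression by hand is error-prone, so here is a minimal sketch of how the alternatives could be packed into as few filters as possible. It takes the 250-character figure reported in the comment above as an assumption (check the limit your own account reports); the function name `pack_patterns` and the short sample list are made up for illustration.

```python
def pack_patterns(fragments, limit=250):
    """Greedily join regex alternatives with '|' into patterns of at most `limit` characters."""
    patterns = []
    current = ""
    for fragment in fragments:
        if len(fragment) > limit:
            raise ValueError(f"fragment longer than the limit: {fragment!r}")
        candidate = fragment if not current else current + "|" + fragment
        if len(candidate) <= limit:
            current = candidate
        else:
            # The current pattern is full: store it and start a new one.
            patterns.append(current)
            current = fragment
    if current:
        patterns.append(current)
    return patterns


# A few alternatives from the article's expression, with a tiny limit
# so that the splitting is visible.
fragments = [r"darodar\.", r"semalt\.", "blackhatworth", "ilovevitaly", "prodvigator"]
packed = pack_patterns(fragments, limit=30)
for number, pattern in enumerate(packed, start=1):
    print(f"filter {number}: {pattern}")
```

Each resulting pattern would go into its own exclude filter on the same view. Note that the alternatives are passed in as a list, so a group such as `(free|share|social).*?buttons?\.` stays in one piece.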

Hi Georgi

great article! I found similar solutions on different sites before I found your website.

The filters work great, but new spam sites showed up that were not filtered so I kept looking for “the perfect” solution.

Then I found a website that suggested to just create a new Google Tracking Code.

They also wrote that spam referrals obviously look at tracking code ending with -1

So I tried it! What have I got to lose?

I have created a new Google Tracking ID ending with -2 and replaced the original one with it.

Before the change, I’ve had all the sites you mentioned, like social-buttons, hulfingtonpost, darodar, etc.

Now when I look at my referrals, I have no spam at all. Only the ones I can explain, like comment links, forum links, etc. (i changed the tracking code in April, so I have three months of data).

I have to admit I am not an SEO expert and don’t know if this method has any side effects, but it just works great and it is so easy to install.

So let me know what you think and if you have tried it.

I just wanted to share my experience.

Hi Thomas,

This is patently not true. I’ve access to hundreds of GA properties through my line of work and I can assure you that all UA-IDs get spammed, not just ones ending on -1. I do see variation between sites and spammers definitely have varying approaches. Even if it helps in some cases, it’s not a viable solution for most, since you’re instantly losing the ability to easily compare data after the switch to data prior to the switch (barring the work you’d need to do, which is a lot in more complex setups).

So the -1 is a myth. Do some sites get more spam than others? Certainly. Why? Can’t say for sure. Would I recommend switching Tracker IDs? Nope.

Hope this helps,

Georgi

Hi great article, can you explain the following 2 lines, the differences between them and what they’ll filter out.

buttons-for.*?website

share.?buttons

thanks.

Thanks Brian. The two buttons patterns filter a ton of similar websites, none of which are likely to be sending real traffic to anyone. They are broad so that even if the spammers create new similar domains, those will be automatically filtered out, with no need to update the filter for each domain.

Thanks for this comprehensive article Georgi. My own small website is around 80-90% referrer spam these days.

Google Analytics View Filters seem like the way to go but I’ve had no luck with them. Tried various methods but they never seem to work and I’m stumped as to why. I’ve tried to get help from Google but no joy.

I’ve created a new view (to preserve my main view, which includes all the spam). I created a filter to exclude the usual suspects but that results in no data at all appearing in my report. Assumed I’d made a mistake in my filter expression, so removed all filters. The new view still shows no results – when I assume it should look exactly like the main view at this point. Weird. Makes them unusable as a solution for me. I’ve deleted the view and started again but I get the exact same results.

Is this something you’ve ever come across?

Hi Kristy,

No, not really, you’re probably doing something wrong. You can check out our new automated tool that can apply the filters and keep them updated for you: https://www.analytics-toolkit.com/auto-spam-filters/ .

Cheers,

Georgi

Hi Georgi and thanks for your great site!

I am planning to purchase your product but the problem is following:

Few days ago I went to our google analytics account and saw it is obvious that most of the traffic is spam. I went to add filter first accidentally to the ACCOUNT. Then I went to add some more filters to the view (trying to exclude the spam). Unfortunately I read only afterwards that it is highly recommended to create a new view and put the filters to the new view and remain the original view just in case.

Unfortunately I read this only after I had already put the filters to the original view and account 🙁

So now it shows no traffic at all to our site. Today I removed all filters (I originally set the filters to exclude countries).

So what I am asking is: how big a problem is this? I only got to know later that the filters are permanent! I never thought you could not remove a filter that has been set before! I hope Google Analytics starts showing the real stats again (including the spam) after I removed the filters. If it starts showing the stats again I could purchase your product.

Any advice concerning my problem would be highly appreciated.

Thanks!

Hi Tuomo,

I don’t think I can help with that, as this requires me to have access to your GA account so that I can see what you’re doing wrong (likely reason for the issues you’re having).

If you remove all filters you should see your stats again. If you still don’t see them, then that’s a different, likely technical implementation issue…

Cheers,

Georgi

Thanks a lot. I tried several other methods before I came across this article and it’s the only fully ready option to filter spam. Other solutions simply don’t work.

Hello Georgi, thank you for the post is very informative!

I just realized I have this problem, I noticed the last couple of days a huge increase of entries and I just looked for the hostnames as you suggest, and it says there are only around 600 entries coming from my host and another 1000 entries by other hostnames. But there are one thousand entries each one with a different hostname…

I’ve never had this problem and I would like to ask you two or three questions since I’m a little worried.

1) I just published an update of my site, basically the exact same day this started to happen. I added 21 more pages to my site (I looked in Webmaster Tools and it says Google has not yet indexed them, but I saw something curious: last night it said it un-indexed 11 pages). Is there a chance that this is not SPAM or ghosts, but Google or something? Sorry if this question is stupid, I don’t know much or anything about developing (I publish my site through Everweb, which is a drag and drop web creator).

2) Could this SPAM do some damage to my site’s position in organic search results? Or does it only affect my analytics statistics? And 3) Does this SPAM go away if unattended? Is it temporary?

I appreciate very much your answer… And thanks again for the post. I’ll maybe wait for answer before to put the filter you mentioned.

Hello Roger,

I suggest you go over the article and other articles on the topic on our blog – the answers are all there. Still, you could be experiencing something else, I can’t tell since I can’t see what you are dealing with. And the short answer is 2.) No, 3.) No.

Best,

Georgi

Hi Georgi, thanks for the great and informative article. I have a suggestion that might fix the referrer spam issue.

As far as I can tell the only reason bots are able to create spam referrals is because a websites Tracking Id is embedded in the HTML, this is required for the google analytics library to work.

Why don’t we forward the google analytics pixel tracking URL to our own server/application and forward the google analytics pixel tracking request server side. We then only insert the Tracking Id on the server side and thus the Tracking Id is not visible for spammers to scrape.

What are your thoughts on the above-mentioned suggestion?

Regards

Tjaart van der Walt

Hi Tjaart,

That might help if there is no historical data about the association between the UA-ID and the domain. If you’re going to do that, you might just as well use the Measurement Protocol directly. It is, however, too complicated a solution for most websites.

Best,

Georgi